The first thing the team admitted was simple and honest: they weren’t stuck because they lacked data. They were stuck because they couldn’t find it, trust it, and act on it fast enough. Across a global biobank network, clinical context lived in one place, sample details in another, storage records somewhere else, and assay results in yet another tool. Export files didn’t behave like connected tables. Only a few specialists could stitch it all together, and even then, building a cohort with nuanced logic—include this, exclude that, match these pairs, filter by dates and volumes—could take days. Meanwhile, project managers refreshed inboxes, scientists waited, and operations worried about reserving the wrong samples or double‑booking a case.

They chose Scispot because the problem wasn’t the lack of a single database; it was findability, trust, and speed of decision. The goal was not to replace everything they owned, but to create one place where their most important questions could finally get straight answers: quickly, consistently, and with confidence.

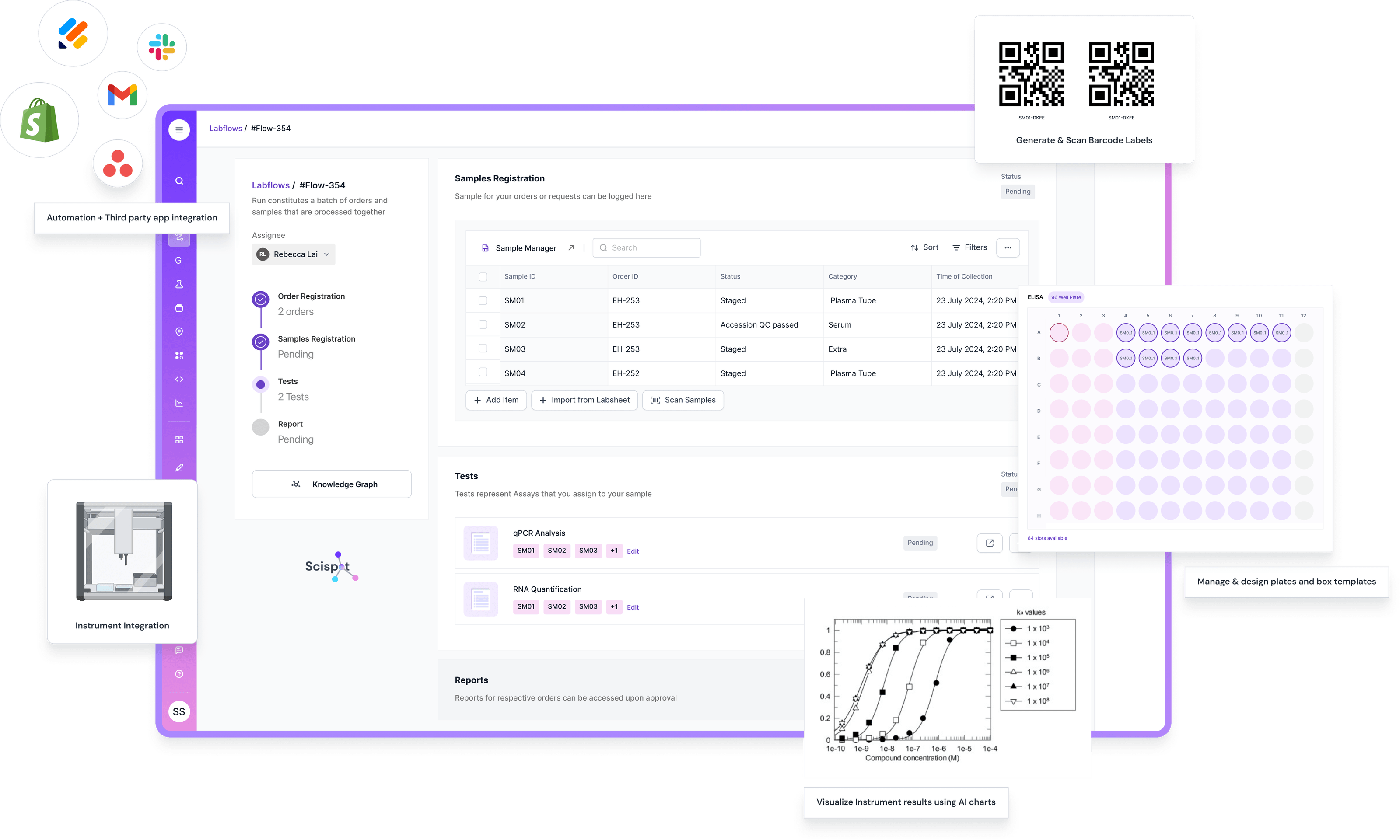

Scispot started by connecting the sources they already had. Using GLUE, the team brought clinical entries, biobank inventories, storage metadata, and assay notes into a unified layer without losing the original granularity or lineage. In Labsheets, each domain felt familiar—like a spreadsheet—but was now harmonized and mapped. The same case that appeared under different IDs across systems was recognized as one entity. The practical effect was profound: a scientist could move from browsing to building a question without wondering whether two rows described the same person or sample.

The day-to-day experience changed inside the Query Manager. People who once relied on a specialist could drag and drop fields, combine them with AND/OR/MINUS logic, and shape nuanced cohorts on their own. They saved dynamic templates for common requests and created frozen, time‑stamped snapshots when an exact result needed to be shared, audited, or rerun later. Users who preferred plain language could lean on the AI assistant instead. A sentence like, “Show sample IDs where the diagnosis code starts with D17 and the storage location includes RR12,” became a structured, explainable filter set. As users typed, Scispot suggested real values from their data (type “F…” and it offered FFPE and Fresh Frozen), recommended the next fields to consider, and chose sensible operators for each field. It felt less like wrestling with a query tool and more like having a guide who knew the shape of their data.
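To make the AND/OR/MINUS idea concrete, here is a minimal sketch that treats each filter as the set of sample IDs it matches and combines filters with set operations. The sample records, the field names (`diagnosis_code`, `storage_location`, `sample_type`), and the helper `ids_where` are hypothetical illustrations, not Scispot's query engine or API.

```python
from dataclasses import dataclass


# Hypothetical sample records; field names are illustrative only.
@dataclass(frozen=True)
class Sample:
    sample_id: str
    diagnosis_code: str
    storage_location: str
    sample_type: str


SAMPLES = [
    Sample("S1", "D17.1", "RR12-A3", "FFPE"),
    Sample("S2", "D17.9", "RR07-B1", "Fresh Frozen"),
    Sample("S3", "C50.4", "RR12-C2", "FFPE"),
    Sample("S4", "D17.0", "RR12-D5", "Serum"),
]


def ids_where(predicate) -> set[str]:
    """Return the IDs of samples that satisfy a single filter (hypothetical helper)."""
    return {s.sample_id for s in SAMPLES if predicate(s)}


# Individual filters, each producing a set of sample IDs.
d17 = ids_where(lambda s: s.diagnosis_code.startswith("D17"))   # {"S1", "S2", "S4"}
rr12 = ids_where(lambda s: "RR12" in s.storage_location)        # {"S1", "S3", "S4"}
serum = ids_where(lambda s: s.sample_type == "Serum")           # {"S4"}

# AND = intersection, OR = union, MINUS = set difference.
d17_and_rr12 = d17 & rr12       # {"S1", "S4"}
d17_or_rr12 = d17 | rr12        # {"S1", "S2", "S3", "S4"}
cohort = d17_and_rr12 - serum   # {"S1"}

print(sorted(cohort))
```

Modeling each filter as a set keeps the combination rules easy to explain: the natural-language request quoted above maps to a single intersection, and an exclusion such as "but not Serum" maps to a difference.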

Results arrived in seconds, not hours, and they arrived with context that built trust. Every record carried a match percentage that reflected how closely it fit the chosen criteria. A perfect score meant the sample matched everything; anything less came with a short, human explanation—perhaps the surgery date was just outside the requested window, or a sample group was Serum when FFPE was expected. Busy teams didn’t have to debate a mysterious score; they could read why it wasn’t 100% and decide whether it still worked for the study. The simple clarity of “why not 100%” turned ambiguity into a decision.
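One simple way to pair a score with a "why not 100%" explanation is to count the fraction of criteria a record satisfies and collect a short message for each criterion it misses. The sketch below assumes every criterion is weighted equally and uses hypothetical field names (`surgery_date`, `sample_type`, `volume_ul`) and thresholds; Scispot's actual scoring may weight or compute criteria differently.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class Criterion:
    """A named check plus the explanation shown when the check fails."""
    name: str
    check: Callable[[dict], bool]
    miss_reason: Callable[[dict], str]


def score(record: dict, criteria: list[Criterion]) -> tuple[float, list[str]]:
    """Return (match percentage, reasons for each unmet criterion).

    Assumes every criterion carries equal weight (an illustrative choice).
    """
    if not criteria:
        return 100.0, []
    reasons = [c.miss_reason(record) for c in criteria if not c.check(record)]
    met = len(criteria) - len(reasons)
    return round(100 * met / len(criteria), 1), reasons


# Hypothetical study window and thresholds.
WINDOW_START, WINDOW_END = date(2020, 1, 1), date(2020, 12, 31)

criteria = [
    Criterion(
        "surgery_date",
        lambda r: WINDOW_START <= r["surgery_date"] <= WINDOW_END,
        lambda r: f"surgery date {r['surgery_date']} is outside {WINDOW_START} to {WINDOW_END}",
    ),
    Criterion(
        "sample_type",
        lambda r: r["sample_type"] == "FFPE",
        lambda r: f"sample type is {r['sample_type']}, expected FFPE",
    ),
    Criterion(
        "volume",
        lambda r: r["volume_ul"] >= 50,
        lambda r: f"volume {r['volume_ul']} uL is below 50 uL",
    ),
]

# A record whose surgery date falls just outside the window.
record = {"surgery_date": date(2021, 1, 3), "sample_type": "FFPE", "volume_ul": 80}
pct, why = score(record, criteria)
print(pct, why)
```

Here the record meets two of three criteria, so it scores 66.7 and carries one reason naming the out-of-window surgery date, which is the kind of near miss a team can judge for itself.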

Acting on results had been risky; now it was routine. From the same view, users reserved the exact samples they needed. Scispot handled holds, quantities, and conflicts, preventing double bookings and surfacing safe alternatives when there was overlap. Reservations were logged end to end, with a clear history of who reserved what and why. Compliance no longer felt like a separate project. Role‑based access, field masking for sensitive attributes, and auditable logs lived alongside the work. Snapshots could be e‑signed when needed. If someone asked, “What did we run for this request three months ago?” the answer was a click away, complete with compiled filters and the data sources used at that time.

The emotional shift was noticeable even before the metrics rolled in. Scientists no longer opened their laptops on Monday braced for a waiting game. They asked for a cohort and watched it appear. Project managers stopped being air‑traffic controllers for ad hoc requests. Operations gained calm, because the system caught conflicts before they turned into awkward calls. People trusted the software not because it was flashy, but because it explained itself. Small wins compounded: a saved template here, a snapshot there, a reservation done correctly the first time.

When leadership reviewed the change, they focused less on technology and more on tempo. What used to drag for days now moves in minutes. Questions that once required a data specialist became everyday work for biologists and coordinators. Cohort definitions, once buried in emails and fragile spreadsheets, became shared assets anyone could rerun. Fewer mismatches, fewer do‑overs, cleaner audits, and a steady drumbeat of “we can do this now.” It’s hard to overstate how much relief that brings to a team under a deadline.

Under the hood, Scispot was built to stay governed. The unified layer preserved the fidelity of the original sources and respected every rule the organization cared about: codes and vocabularies stayed consistent, units and ranges were normalized, and lineage never disappeared. The AI didn’t guess in ways that put results at risk; it suggested, explained, and adapted as the team worked. And the platform scaled without drama as new sites and tables came online.

The most telling moments were quiet ones. A coordinator who once dreaded complex requests ran a cross‑site query, saw a clean list with match scores, reserved the exact set needed, and sent a time‑stamped snapshot to a client—all before lunch. A scientist iterated on a cohort in front of colleagues, adjusting exclusions on the fly while the AI filled in sensible values, and the room leaned in because the conversation was finally about the study, not the software. Those moments add up. They change how a lab feels.

If your data lives everywhere and your answers live nowhere, you don’t have a data shortage—you have a findability problem. Scispot solves that problem where it matters most: at the point where a scientist asks a real‑world question and needs to act. It brings your sources together, makes queries feel natural, ranks results in a way everyone can understand, and ties discovery to reservation and audit in one flow. For biobanks and research teams who need clarity, speed, and confidence, Scispot is not another place to park data. It’s where work moves forward.
